No one wants their site to crash.

This article is based on a talk I recently gave. An article from TheStreet on the cost of downtime cites Black Friday 2019, when Costco suffered performance issues for 16 hours and lost an estimated $11 million in sales. That's about $11.5k per minute!
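The per-minute figure is the estimated loss spread evenly over the outage. Here is a quick Python check, assuming a uniform loss rate (real traffic certainly isn't uniform):

```python
def cost_per_minute(total_loss: float, hours: float) -> float:
    """Spread an estimated loss evenly across the outage duration, in minutes."""
    return total_loss / (hours * 60)

# Figures from the TheStreet article: an estimated $11M lost over 16 hours.
per_minute = cost_per_minute(11_000_000, 16)
print(f"${per_minute:,.0f} per minute")  # $11,458 per minute
```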

I suspect the real cost to them was closer to double that.

Beyond lost sales, an event like this causes a lot of stress.

An all-hands-on-deck response means stopping everything else and paying people to focus on the emergency instead of whatever they would otherwise have been doing.

If they needed a scapegoat, they might also have paid the cost of replacing employees. I'm sure it caused a dip in morale as well.

And what comes after Thanksgiving shopping? Christmas shopping. Everyone was probably on high alert until after Christmas, working to make sure they didn't have a repeat event.

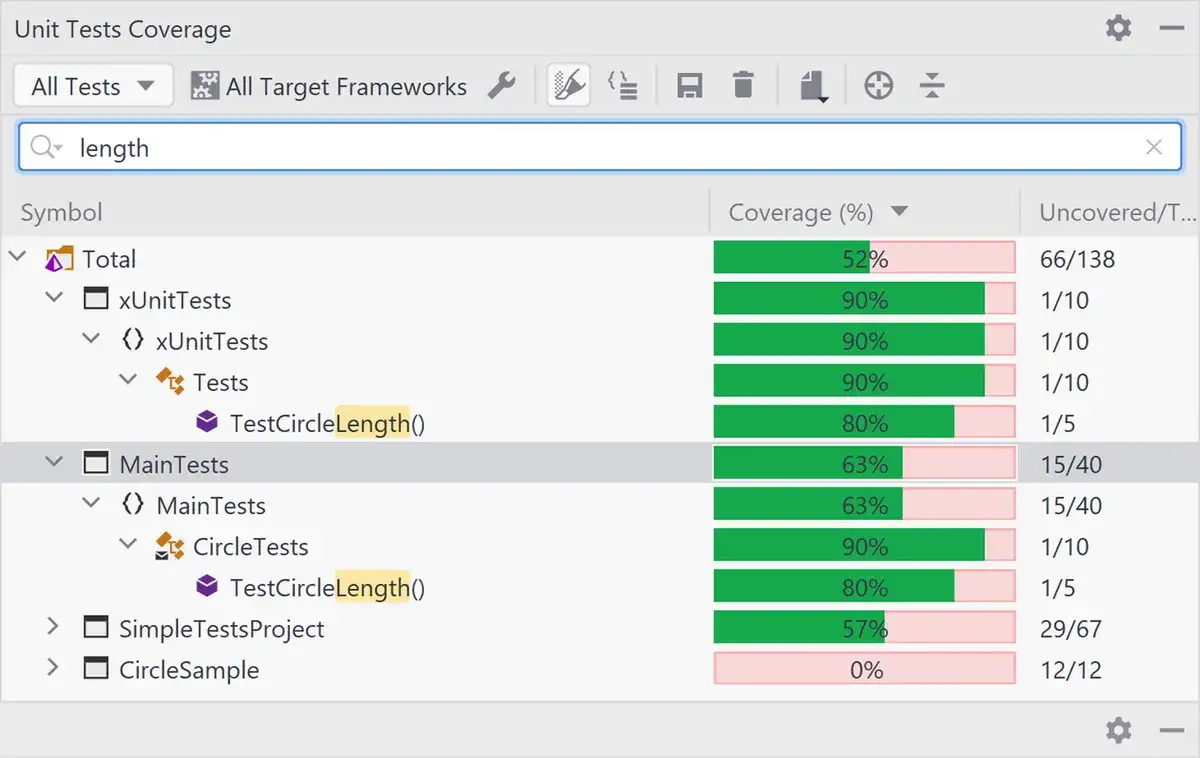

As developers, we employ tools to ensure code quality, like unit testing.

Unit tests validate that our code behaves the way we expect every time the tests run. Code coverage can also point out brittle, untested areas. Ideally, these tests catch errors during the build phase.

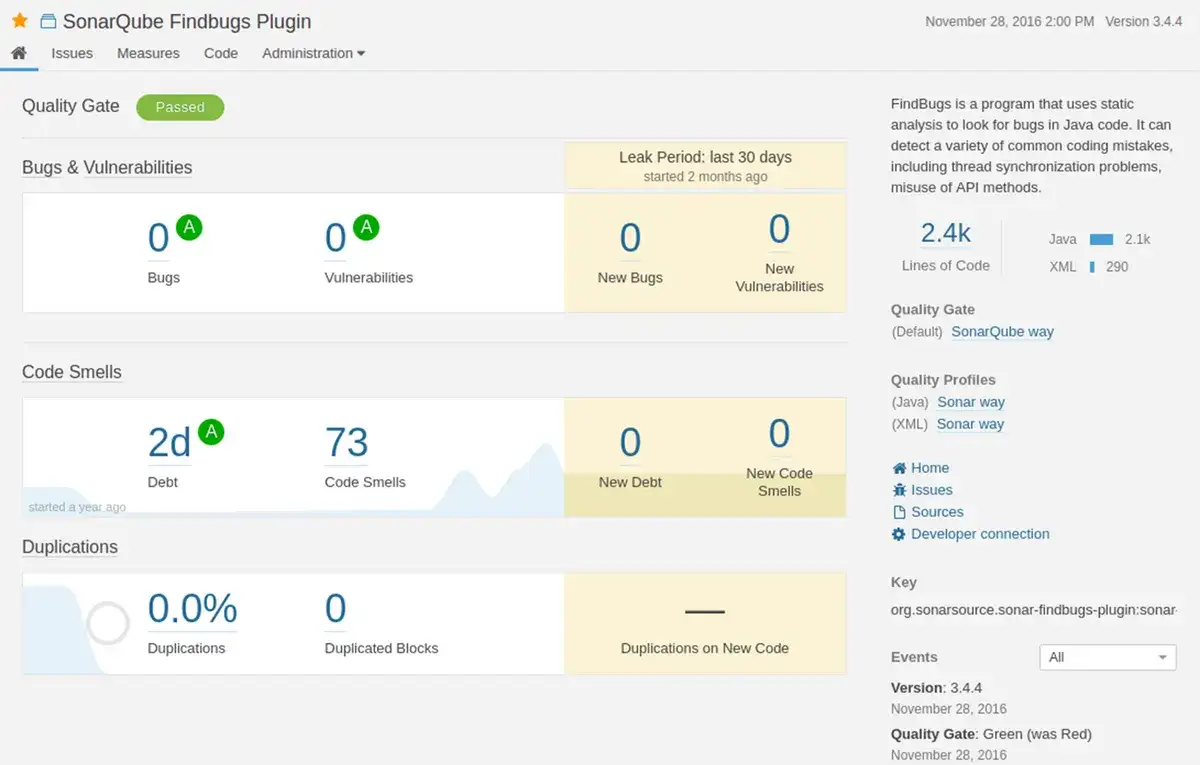

We can also use code smell tools like SonarCloud. SonarCloud scans the code base for things that will be difficult for someone else (or ourselves) to understand in the future, and it can flag places where we've introduced potential bugs. Both kinds of tools help keep us from checking in breaking changes before they get deployed.

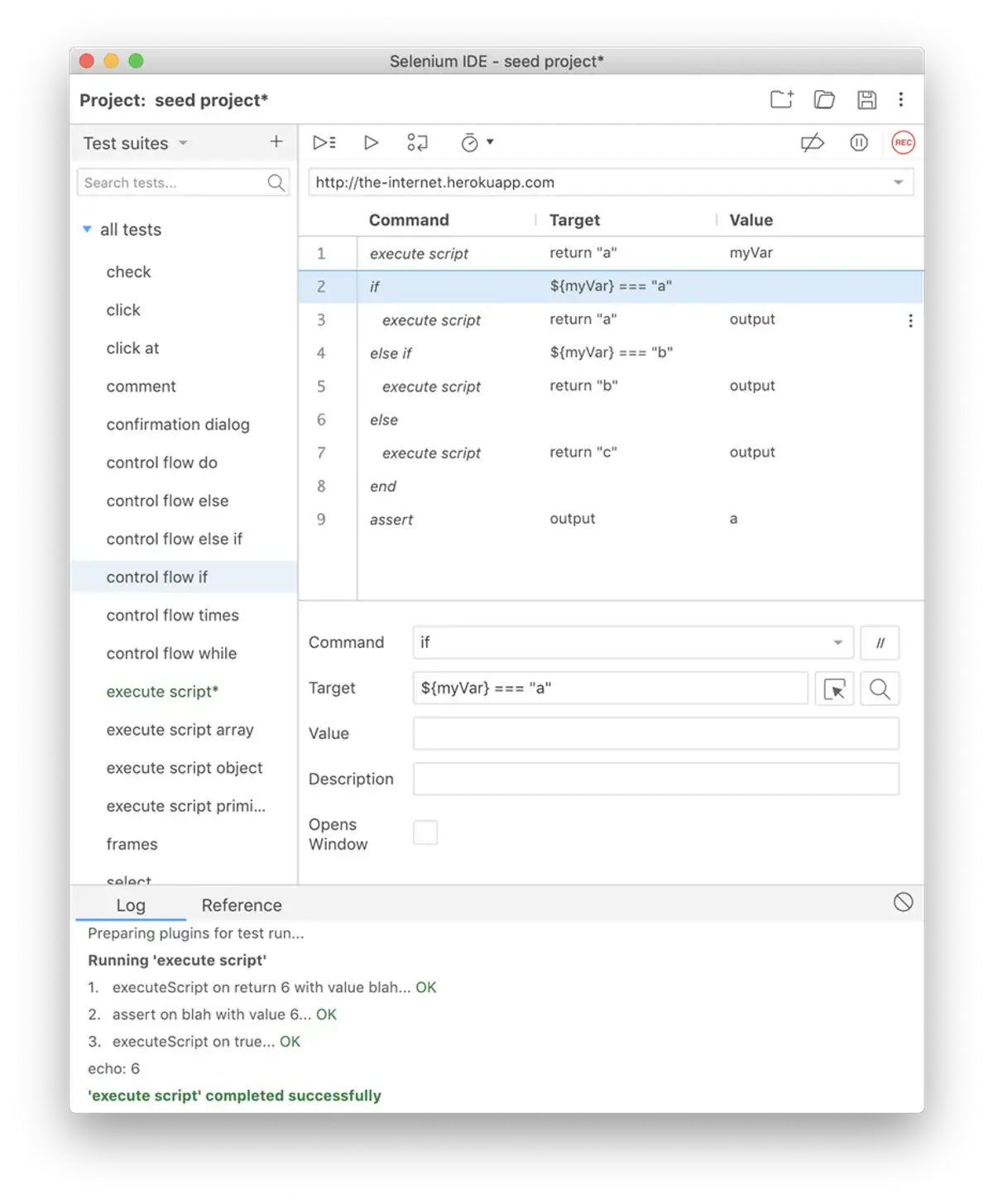

After deployment, we can use tools like Selenium to validate our UI and user flows.

Even with those tools in place and a great code base, Costco still might have had performance issues. It's pretty easy to test that the site works on my machine, or on the QA team's machines.

In the case of Black Friday and other major shopping dates, the sheer volume of users might be our downfall.

How do we mitigate this risk? Enter load testing. We can use load testing to simulate lots of users making simultaneous calls to our API or other backend services. How well does our auto-scaling actually work? Wouldn't you rather know beforehand than find out by "testing in prod"?
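The Python sketch below shows what a load tester does at its core. It runs many simulated users concurrently and records how long each call takes. This is not how JMeter works internally. `fake_endpoint` is a hypothetical stand-in so the sketch runs offline; in practice the call would be an HTTP request to the API under test.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

def run_load(call: Callable[[], None], users: int, requests_per_user: int) -> list[float]:
    """Run `call` from `users` concurrent workers and return every call's latency in seconds."""
    def user_session() -> list[float]:
        latencies = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            call()
            latencies.append(time.perf_counter() - start)
        return latencies

    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(user_session) for _ in range(users)]
        # Flatten each simulated user's latencies into one list.
        return [lat for future in futures for lat in future.result()]

def fake_endpoint() -> None:
    # Hypothetical stand-in for a real HTTP call to the backend.
    time.sleep(0.01)

latencies = run_load(fake_endpoint, users=10, requests_per_user=5)
print(len(latencies), "requests, average", sum(latencies) / len(latencies), "seconds")
```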

JMeter

JMeter is a popular, free, open-source load testing tool. Its test plans are XML files (with a .jmx extension) that act as instruction sets, much like the way we describe our unit tests or Selenium suites. The JMeter UI makes it easy to define which URL we're testing and how many users we want to simulate. For example, Costco might have a shipping rate API that takes the user's order and calculates the shipping cost based on the number of items, their weight, and the destination. We can craft these requests in JMeter's UI as a Test Plan. We can also specify the number of users, the duration, and the user ramp-up.

To demonstrate this, I used a sample Node.js and MongoDB app from Azure.

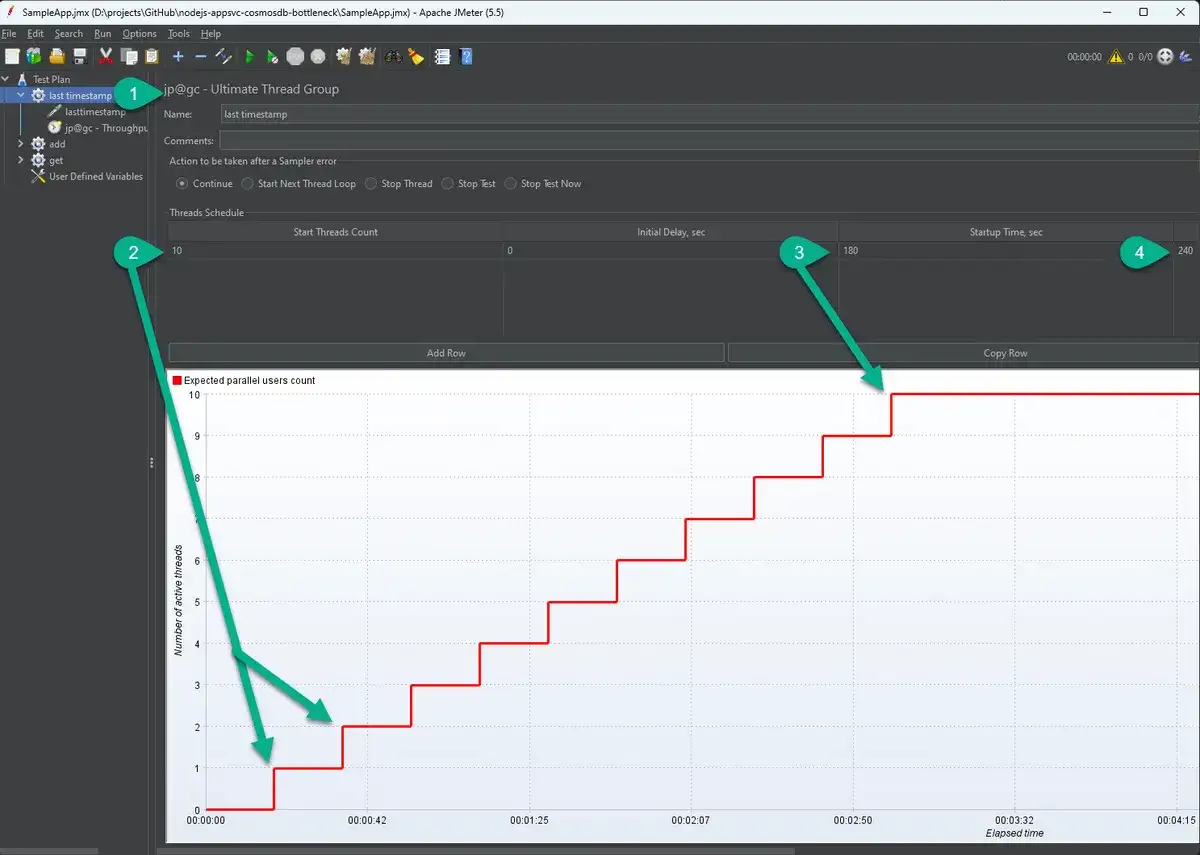

Here's the test plan for the sample app. You can see that there are different thread groups. The first one is for the "last timestamp" endpoint (1). It's configured with 10 threads (2) ramping up over 3 minutes (3) and running for a total of 7 minutes (4).
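JMeter's documentation describes ramp-up as starting threads evenly across the ramp-up period. With 10 threads and a 100-second ramp-up, each thread starts 10 seconds after the previous one. The Python sketch below applies that rule to this thread group's schedule:

```python
def thread_start_offsets(threads: int, ramp_up_seconds: float) -> list[float]:
    """Seconds after test start at which each thread begins, spaced evenly over the ramp-up."""
    interval = ramp_up_seconds / threads
    return [i * interval for i in range(threads)]

offsets = thread_start_offsets(threads=10, ramp_up_seconds=3 * 60)
print(offsets)  # [0.0, 18.0, 36.0, 54.0, 72.0, 90.0, 108.0, 126.0, 144.0, 162.0]
```

So a new simulated user arrives every 18 seconds, and the last one starts 162 seconds in.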

The HTTP Request describes things like the protocol (1), server address (2), port, path (3), and any parameters (not shown). Notice that the server name (2) is a variable. We define it under "User Defined Variables", which makes it easy to update in a single place; this will be important later.
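JMeter references variables as `${name}`, which happens to match Python's `string.Template` syntax. That makes it easy to sketch why keeping the server name in one variable pays off. This mimics the idea, not JMeter's own implementation. The variable names, default values, and path below are hypothetical.

```python
from string import Template

# Hypothetical defaults standing in for the test plan's "User Defined Variables".
user_defined = {"webapp": "localhost", "port": "443"}

# Hypothetical HTTP Request fields that reference those variables.
request = {"protocol": "https", "server": "${webapp}", "port": "${port}", "path": "/api/lasttimestamp"}

def resolve_url(fields: dict[str, str], variables: dict[str, str]) -> str:
    """Substitute ${name} references in each field, then assemble the request URL."""
    resolved = {key: Template(value).substitute(variables) for key, value in fields.items()}
    return f"{resolved['protocol']}://{resolved['server']}:{resolved['port']}{resolved['path']}"

print(resolve_url(request, user_defined))
# Overriding a single variable changes every request that references it.
print(resolve_url(request, {**user_defined, "webapp": "myapp.azurewebsites.net"}))
```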

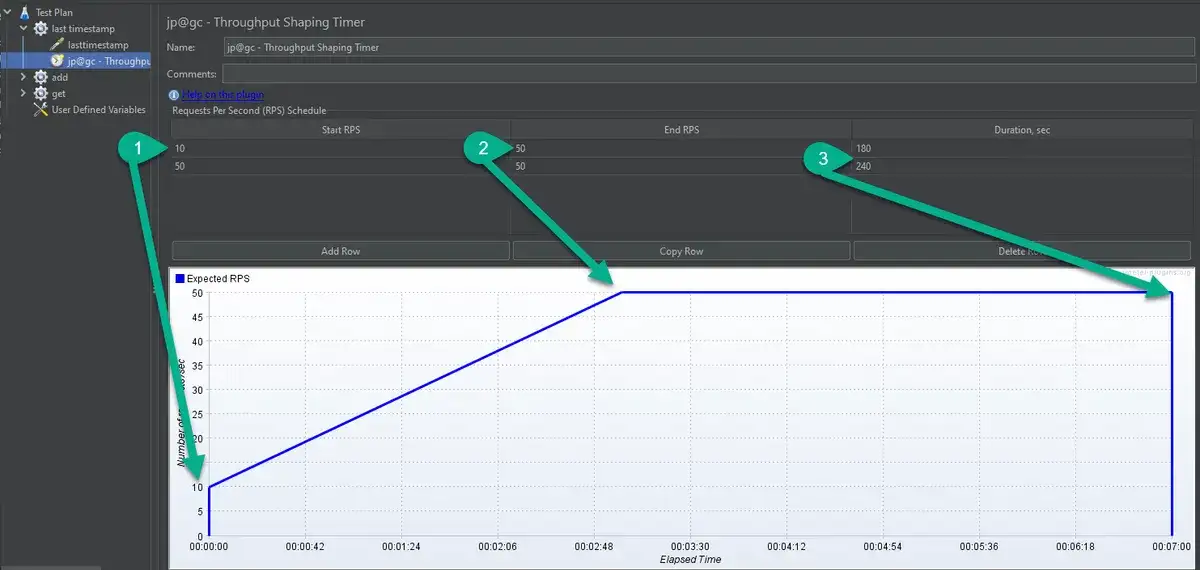

This example also uses a plugin to ramp up the number of requests per second from 10 (1) to 50 (2) over a total of 7 minutes (3).
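The Python sketch below shows the schedule and the total number of requests it implies, assuming the plugin ramps the target rate linearly. If the plugin works by pausing threads, as JMeter timers do, it can only hold requests back. The thread group would then still need enough threads to actually reach 50 requests per second.

```python
def target_rps(t_seconds: float, start_rps: float, end_rps: float, duration_seconds: float) -> float:
    """Linearly interpolated requests-per-second target at time t, clamped to the schedule."""
    t = min(max(t_seconds, 0.0), duration_seconds)
    return start_rps + (end_rps - start_rps) * t / duration_seconds

def total_requests(start_rps: float, end_rps: float, duration_seconds: float) -> float:
    """Area under a linear ramp: the average rate times the duration."""
    return (start_rps + end_rps) / 2 * duration_seconds

DURATION = 7 * 60  # seconds
for minute in range(8):
    print(minute, target_rps(minute * 60, 10, 50, DURATION))
print("total requests:", total_requests(10, 50, DURATION))
```

The average rate is 30 requests per second, so the 7-minute ramp works out to 12,600 requests.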

Once the test plan is built, we can run the CLI tool and see how our endpoints perform.
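Running JMeter headless writes every sample to a results file, for example `jmeter -n -t SampleApp.jmx -l results.jtl` (`-n` for non-GUI mode, `-t` for the test plan, `-l` for the results log; the file names are hypothetical). With JMeter's default CSV output, each row includes, among other columns, `label`, `elapsed` (milliseconds), and `success` (`true`/`false`). The minimal Python sketch below summarizes such a file per endpoint. It assumes a header row with those column names, and the sample data is made up.

```python
import csv
import io
from collections import defaultdict

def summarize(jtl_text: str) -> dict[str, dict[str, float]]:
    """Per-label sample count, average elapsed ms, and error percentage from JTL CSV text."""
    by_label = defaultdict(list)
    for row in csv.DictReader(io.StringIO(jtl_text)):
        by_label[row["label"]].append(row)

    summary = {}
    for label, rows in by_label.items():
        elapsed = [int(r["elapsed"]) for r in rows]
        errors = sum(1 for r in rows if r["success"].lower() != "true")
        summary[label] = {
            "samples": len(rows),
            "avg_ms": sum(elapsed) / len(elapsed),
            "error_pct": 100.0 * errors / len(rows),
        }
    return summary

# Made-up excerpt containing only the columns this sketch reads (plus two for context).
sample = """timeStamp,elapsed,label,responseCode,success
1700000000000,120,lasttimestamp,200,true
1700000000500,180,lasttimestamp,200,true
1700000001000,900,lasttimestamp,500,false
1700000001200,60,insert,200,true
"""
for label, stats in summarize(sample).items():
    print(label, stats)
```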

That's all well and good, but this talk was for the DevOps group, so how do we automate this?

Azure Load Testing

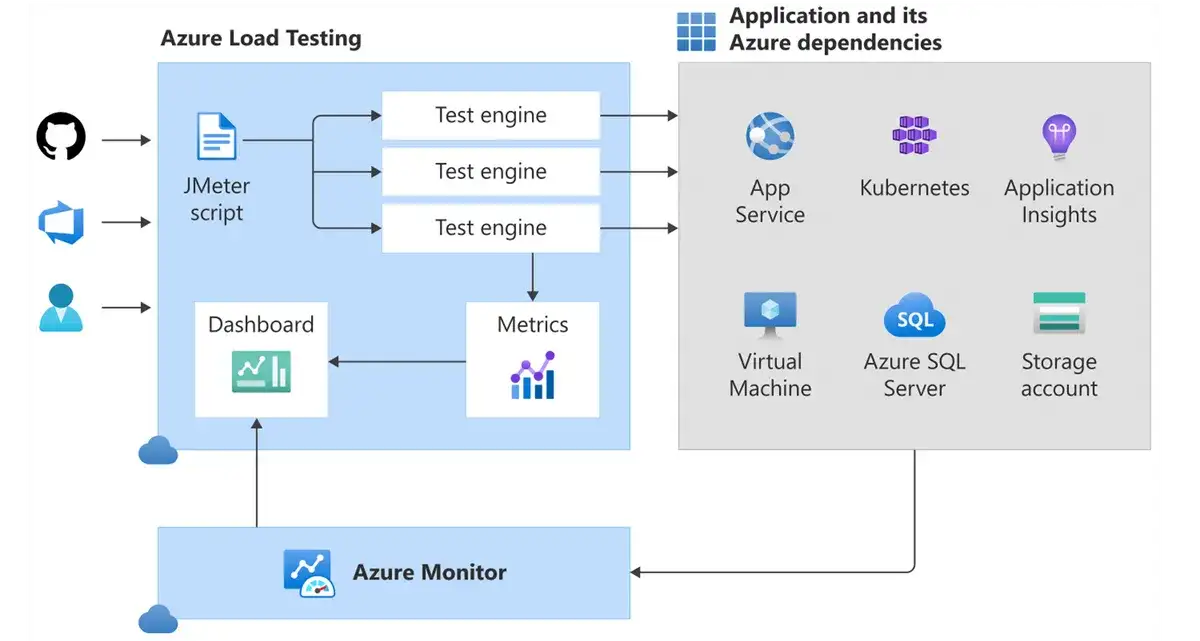

Oh, look! Azure has a preview service called Azure Load Testing, or, as it's known on the street, "How much effort does it take to crash your site?" How about that. It uses JMeter (JMX) files to run the load tests.

A word of caution: this service is in preview (as of this writing) and costs $10 per month per instance, even if you don't use it.

As I mentioned, I'm using a sample Node.js and MongoDB app from Azure that simulates some bottlenecks and generates errors. I deployed the app, created a load test instance, and uploaded the JMX file.

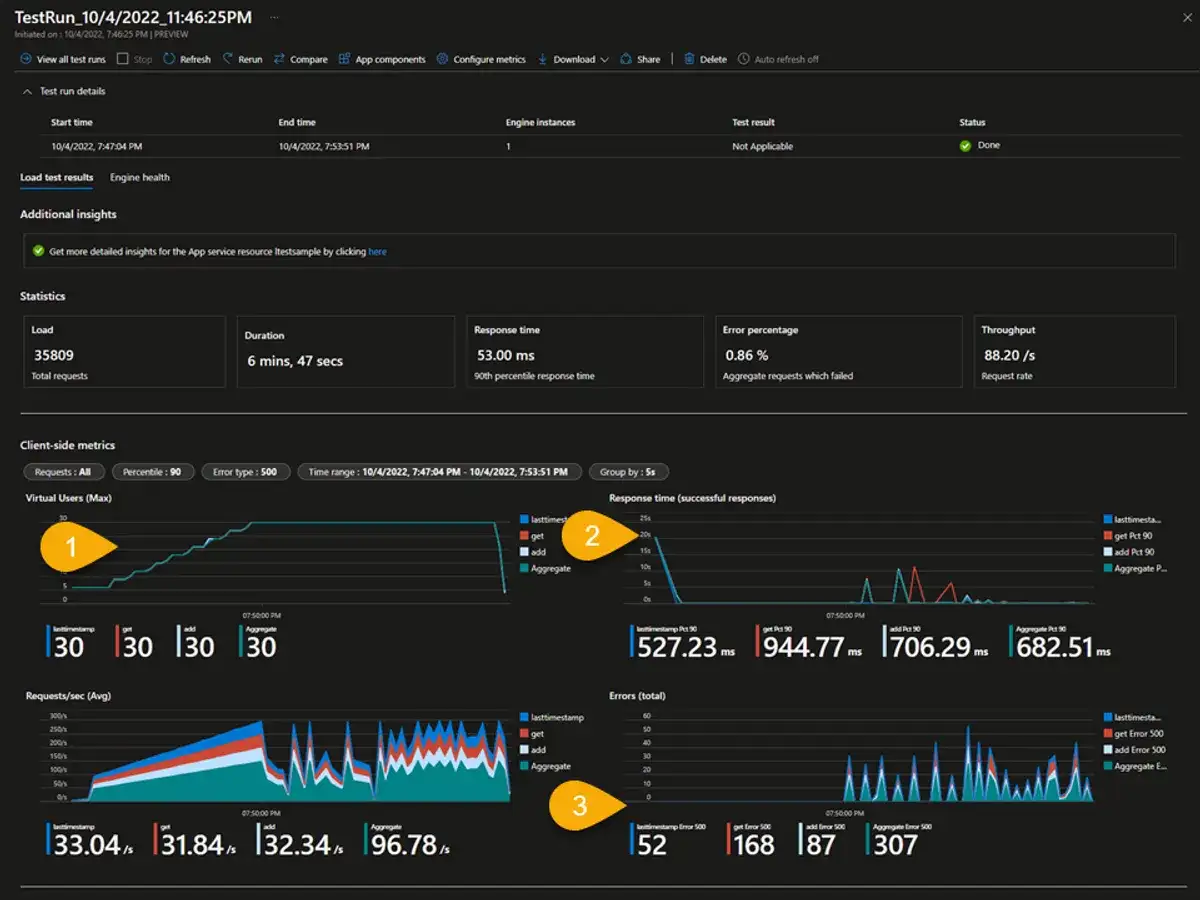

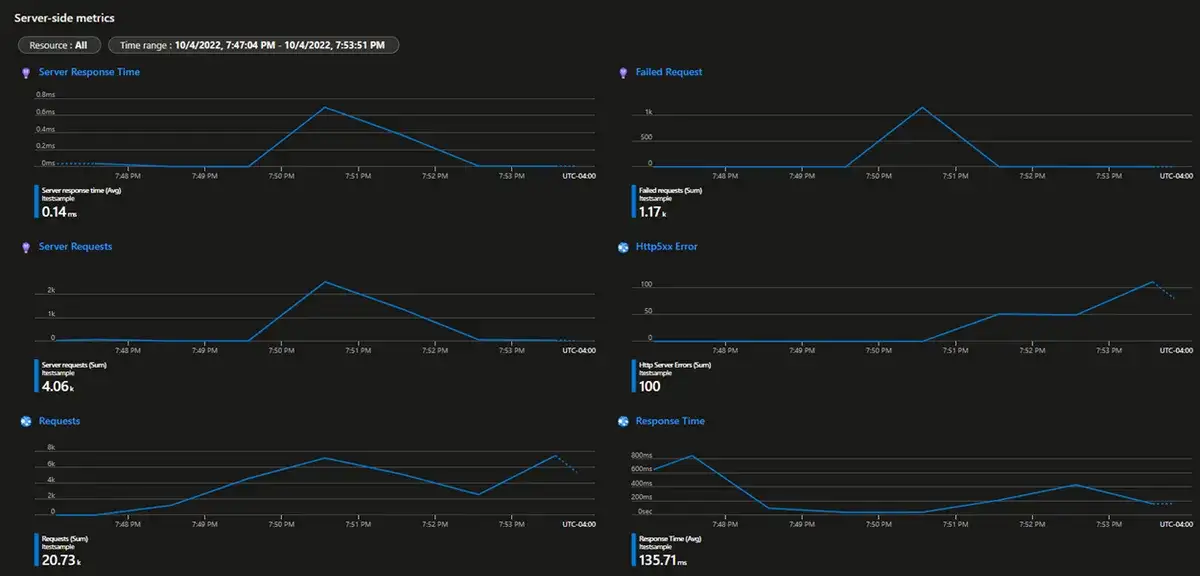

You can see from a test I already ran that it provides quite a bit of detail, such as the user ramp-up over time (1), the response time (2), and the number of errors (3).
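Charts like these aggregate individual samples over time windows. The minimal Python sketch below does that bucketing. It assumes each sample is a `(timestamp_ms, elapsed_ms, success)` tuple, and the data is made up.

```python
from collections import defaultdict

Sample = tuple[int, int, bool]  # (timestamp_ms, elapsed_ms, success)

def bucket_by_window(samples: list[Sample], window_ms: int = 60_000) -> list[dict]:
    """Group samples into fixed windows from the first sample; report requests, avg latency, errors."""
    start = min(ts for ts, _, _ in samples)
    windows = defaultdict(list)
    for ts, elapsed, ok in samples:
        windows[(ts - start) // window_ms].append((elapsed, ok))

    report = []
    for index in sorted(windows):
        rows = windows[index]
        report.append({
            "window": index,
            "requests": len(rows),
            "avg_ms": sum(elapsed for elapsed, _ in rows) / len(rows),
            "errors": sum(1 for _, ok in rows if not ok),
        })
    return report

# Made-up samples spanning two one-minute windows.
samples = [(0, 100, True), (30_000, 200, True), (61_000, 300, False), (90_000, 500, True)]
for row in bucket_by_window(samples):
    print(row)
```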

We can also add our app components, like Application Insights, Cosmos DB, and the App Service plan, so their metrics show up alongside the test results.

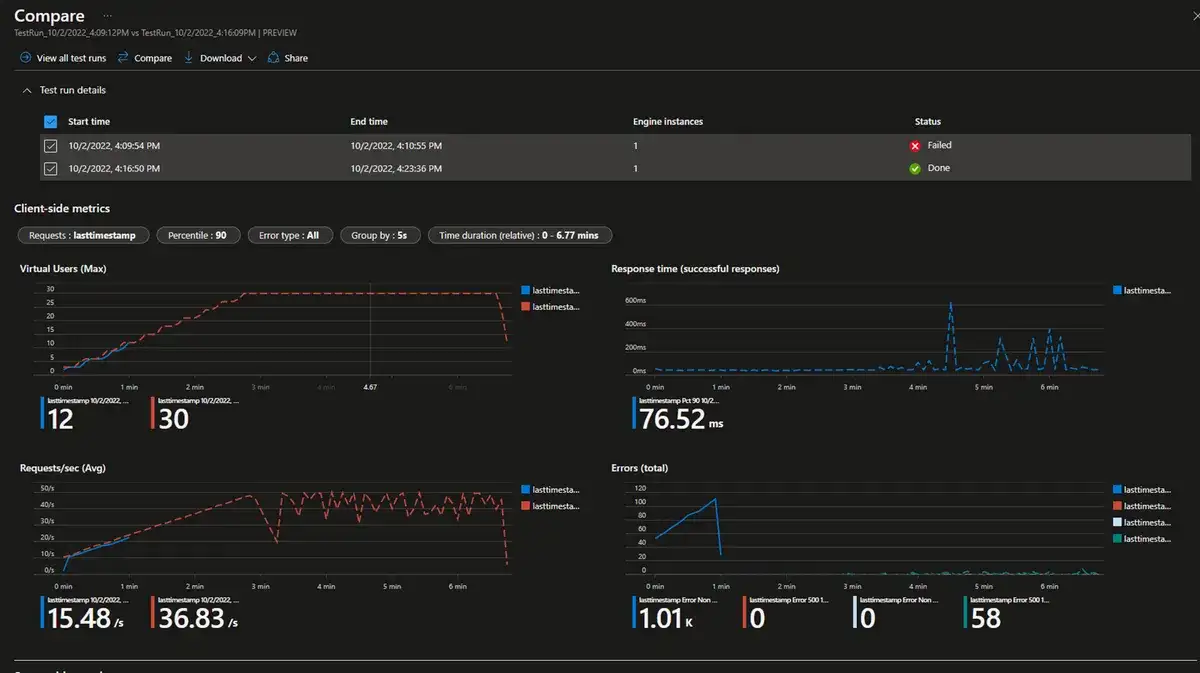

You can also compare results across multiple runs. The red and blue lines in the first chart are two different runs; the blue run failed and ended the test early.

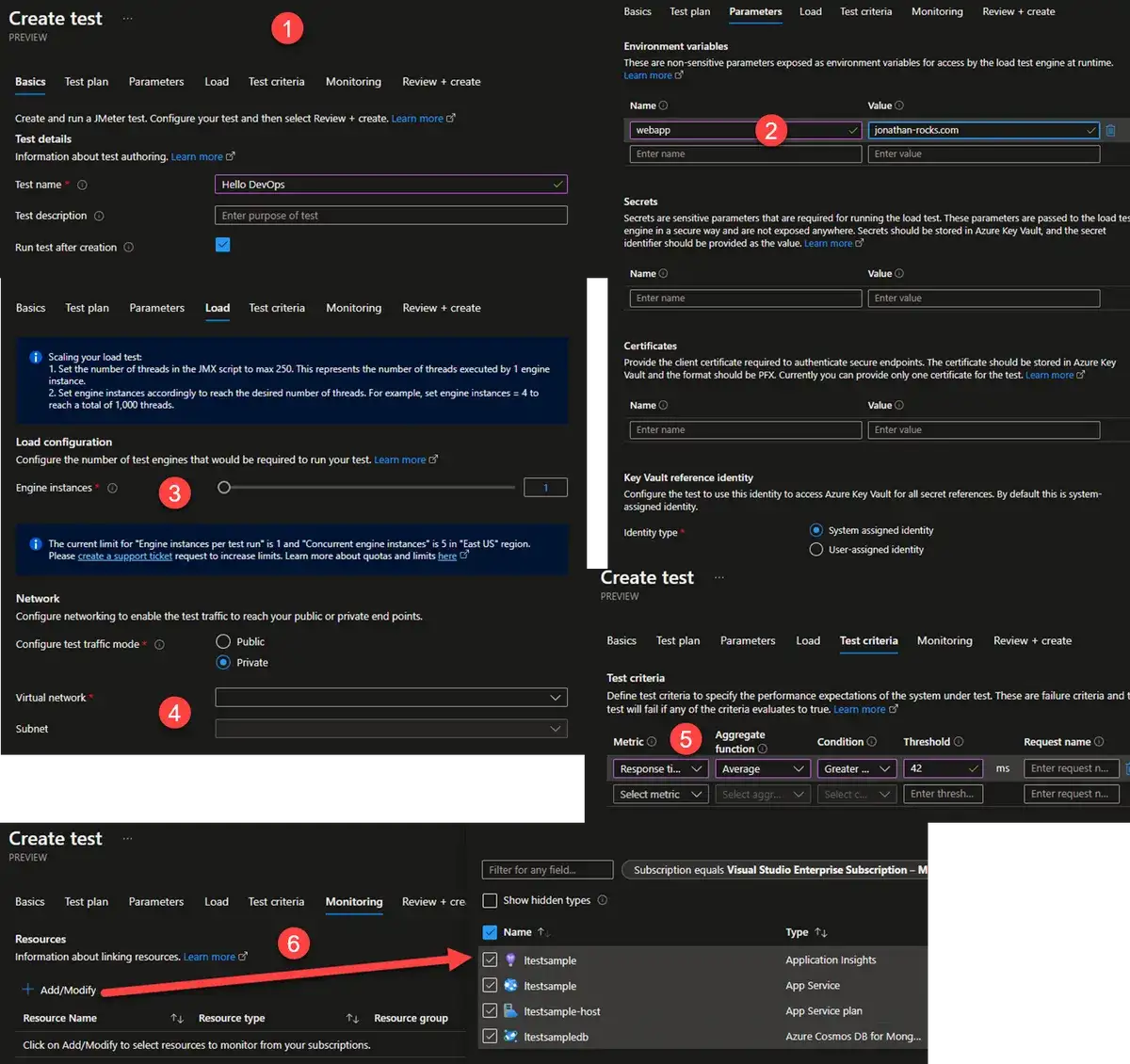

I won't walk through creating a new test in full, but I did want to highlight a few things.

- We can give the test a name

- In the parameters section, we can override variables defined in the JMX file. We can also pull secrets from a Key Vault.

- We can customize the number of instances in the Load section. The network section is interesting: with private networking selected, you can integrate with a VNet and, if needed, a public IP address resource. Enabling this lets your traffic come from a known set of IP addresses, which makes it easier to test outside resources without your traffic looking like a DDoS attack.

- We can specify test criteria. The test fails if any of these criteria are met.

- Here's where we can add our service-side resources. These can be added after the test is created as well.

- This can also be part of both GitHub Actions and Azure DevOps pipelines. If I were doing this for a client, I'm not sure how I would implement the CI/CD part. On the one hand, you would want to test your production setup, because otherwise you haven't really verified that infrastructure with that particular deployment. On the other hand, if there were a bottleneck, you could put your resources into a bad state and cause problems for your actual users.
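The test criteria mentioned above are written as rules such as `avg(response_time_ms) > 300`. The Python sketch below shows how rules like that turn a run into a pass or a fail. It supports only a small, hypothetical subset of that style and is not Azure's implementation. The thresholds and data are made up.

```python
import re

# Hypothetical criteria, modeled on the "aggregate(metric) operator threshold" style.
CRITERIA = ["avg(response_time_ms) > 300", "percentage(error) > 20"]

PATTERN = re.compile(r"^\s*(avg|max|percentage)\((\w+)\)\s*([<>])\s*(\d+(?:\.\d+)?)\s*$")

def failed_criteria(criteria: list[str], response_times_ms: list[float], errors: int) -> list[str]:
    """Return the criteria that were met, i.e. the reasons the test would be marked as failed."""
    metrics = {
        ("avg", "response_time_ms"): sum(response_times_ms) / len(response_times_ms),
        ("max", "response_time_ms"): max(response_times_ms),
        ("percentage", "error"): 100.0 * errors / len(response_times_ms),
    }
    failed = []
    for criterion in criteria:
        match = PATTERN.match(criterion)
        if match is None or (match.group(1), match.group(2)) not in metrics:
            raise ValueError(f"Unsupported criterion: {criterion}")
        aggregate, metric, operator, threshold = match.groups()
        value = metrics[(aggregate, metric)]
        limit = float(threshold)
        if (operator == ">" and value > limit) or (operator == "<" and value < limit):
            failed.append(criterion)
    return failed

print(failed_criteria(CRITERIA, [120, 180, 900], errors=1))  # both criteria met
print(failed_criteria(CRITERIA, [100, 200, 150], errors=0))  # []
```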

Here's what the load test stage of an Azure DevOps pipeline looks like.

```yaml
- stage: LoadTest
  displayName: Load Test
  dependsOn: Deploy
  condition: succeeded()
  jobs:
  - job: LoadTest
    displayName: Load Test
    pool:
      vmImage: ubuntu-latest
    steps:
    # Requires a plugin: https://marketplace.visualstudio.com/items?itemName=AzloadTest.AzloadTesting
    - task: AzureLoadTest@1
      inputs:
        azureSubscription: $(serviceConnection)
        loadTestConfigFile: 'SampleApp.yaml'
        resourceGroup: $(loadTestResourceGroup)
        loadTestResource: $(loadTestResource)
        # Overrides the "webapp" variable defined in the JMX file.
        env: |
          [
            {
              "name": "webapp",
              "value": "$(webAppName).azurewebsites.net"
            }
          ]
    - publish: $(System.DefaultWorkingDirectory)/loadTest
      artifact: results
```

I removed some code from the code block above to highlight the one part that is interesting to us: the Azure Load Test task. It points to the test config file and a list of environment variables. In the environment variables, we're overriding the server name variable I mentioned previously.
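The task's `env` input is a JSON array of `name`/`value` objects. If a pipeline needs to override several variables, one option is to generate that string from a dictionary rather than hand-editing JSON inside YAML. Below is a minimal Python sketch; the helper and the value are hypothetical.

```python
import json

def build_env_input(variables: dict[str, str]) -> str:
    """Render name/value pairs as a JSON array of {"name": ..., "value": ...} objects."""
    return json.dumps(
        [{"name": name, "value": value} for name, value in variables.items()],
        indent=2,
    )

# Hypothetical value; in the pipeline above it comes from $(webAppName).
env_input = build_env_input({"webapp": "myapp.azurewebsites.net"})
print(env_input)
```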

I hope this look into Azure Load Testing was helpful and gave you some ideas for your own clients. What did you think?